Posts by Joseph Stateson

1)

Message boards :

Questions and problems :

Only 1 out of seven projects reporting work

(Message 113127)

Posted 17 Nov 2023 by Joseph Stateson

Post: I am attached to seven projects: I cannot remember the last time I got work from them.
https://boinc.bakerlab.org/rosetta/server_status.php
https://www.gpugrid.net/server_status.php
Nothing to send.
Sign up for yoyo; they have plenty. WCG comes and goes.
Climate is a waste of energy: if CO2 levels drop any lower, most life could end. We are at about 417 ppm and should be at 10x that to turn everything green.

2)

Message boards :

The Lounge :

Windows 11 23H2?

(Message 113124)

Posted 17 Nov 2023 by Joseph Stateson

Post: I'm just curious: has anyone upgraded their system to Win 11 23H2, and did they have any problems with it? I upgraded 6 systems to 23H2 using the Rufus trick. None of the systems were "blessed" by Microsoft. All were in-place upgrades.
Lenovo S20 (10 -> 23H2): a USB-3 PCIe adapter that was working in 10 no longer woke up from sleep. Solved by going to its adapter power management and not allowing it to be turned off.
MSI x229m-a (22H2 -> 23H2): Search no longer worked. Solved by restarting the "Start Menu Experience Host". Also, "iqvw64e.sys" was banned by 23H2, so I just deleted it when I could not find any device using it.
No problems: HP Z400, Dell 435mt, EVGA SLI 131-GT-e767, MSI Z270 SLI Plus.
All systems are working fine. On the other hand, I replaced the broken motor for my car's passenger window, and it goes down instead of up and up instead of down. I am waiting to hear from the vendor whether they sold me the wrong side door motor.

3)

Message boards :

Questions and problems :

7.24.1: install dialog box blocks win11 taskbar search input

(Message 112729)

Posted 21 Sep 2023 by Joseph Stateson

Post: Not sure if this is a Windows 11 22H2 problem or a 7.24.1 problem. New install, existing user account. When it asked for the password, I tried to run a password app by entering the name of the app in the taskbar search box. The search box was highlighted when I clicked the mouse, but any text I typed went into the password box of the BOINC Manager instead. I had to close the username/password dialog box before I could run my password manager.

4)

Message boards :

Projects :

World Community Grid needs help. can they get any here?

(Message 111697)

Posted 1 May 2023 by Joseph Stateson

Post: A couple of weeks ago WCG did make an announcement that the stats pages would be out of sync for some time, as they were having to rebuild a lot of users' and computers' stats data from scratch. IMHO, out of sync is not the same as missing devices. I have devices registered before 2019 that are crunching away but not listed. Users are posting their host IDs and the staff is forwarding the IDs for manual entry into the database. That is NFG, and they need help.

5)

Message boards :

Projects :

World Community Grid needs help. can they get any here?

(Message 111695)

Posted 1 May 2023 by Joseph Stateson

Post: I just realized that only 4 of my 9 systems show up as active over at WCG. The missing 5 systems have been crunching away for several weeks with no problems indicated. Their forum is full of users reporting the same problem. Some users have 1000s of missing devices. Probably that Charity Engine gang is worried about the Gridcoin they are losing. There are several threads, all too long for me to go through. I was unable to find an official statement about the problem and what to do.

6)

Message boards :

Projects :

News on Project Outages

(Message 111685)

Posted 29 Apr 2023 by Joseph Stateson

Post: Milkyway@home is down. AGAIN. Yea, they seem to have problems, and when they do come back up there is no mention of what went wrong. My AMD boards are only useful on Milkyway. Their superior double-precision (DP) floating-point performance does not provide any benefit on Einstein. Asteroids had no OpenCL apps, and NumberFields' OpenCL app seems to run only on newer AMD cards with UEFI BIOS. When I asked about the problem, the admin did not know how the controlling XML was generated, let alone how to fix it... There was a bad thunderstorm with heavy hail last night and I shut them all off. I may leave them off the whole summer. My nVidia boards run fine on all projects. I really miss SETI.

7)

Message boards :

BOINC client :

"SSL Connect Error" BOINC 7.20.2 for Windows 10 22H2

(Message 111581)

Posted 15 Apr 2023 by Joseph Stateson

Post: However, it would be nice if BOINC updated ALL_PROJECTS_LIST.XML to have the correct attachment URL. David normally does this, but it requires that someone (project admins, trusted users, etc.) tell him about it. It can't do it on its own. Unless we add AutoGPT to BOINC and make BOINC sentient. 😂 I recall this was discussed some time ago. WUProp@home is not in the list because they did not bother to ask to have it put in. As to why 7.16 is working, I am guessing it does not rely on Windows to handle the certificate, whereas 7.20 does, and an expired cert somewhere triggers an error in 22H2.

8)

Message boards :

BOINC client :

"SSL Connect Error" BOINC 7.20.2 for Windows 10 22H2

(Message 111579)

Posted 15 Apr 2023 by Joseph Stateson

Post: But this is the message line that you only see when adding http_debug; otherwise it's silent. It's not the same one as the one where you add the project and BOINC then first (or second, or 100th) talks to the scheduler, which checks what project URL is used. This URL is used by Curl, I think. You are probably correct. I did a "find" and https shows up on 22H2 systems that are attached correctly using http. However, it would be nice if BOINC updated ALL_PROJECTS_LIST.XML to have the correct attachment URL. This is what I am guessing is happening (IANE on networks): the difference between http and https is that SSL and TLS certificates are checked when using https. A connection is being made to WCG, but it goes through a driver that has a problem. For example, when I enabled core isolation (an hour ago on a new system), I got an iqvw64e.sys driver error: https://answers.microsoft.com/en-us/windows/forum/all/iqvw64esys-a-driver-cannot-load-on-this-device/dcc336f6-8815-4346-952b-fff97fe81523?page=2 That is a VPN driver, but I do not use VPN and never have, and there is no problem with BOINC on that system. I had to uninstall the Intel Pro networking client to remove the driver and allow core isolation to be enabled. Possibly a connection to WCG goes through a problem driver on SoCrunchy's system. A good check is to enable core isolation and see if any network driver has a problem. I never got that app to work on my Dell system in 22H2. Dell let the certificate expire and was not interested in updating it.
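The "find" mentioned above can be approximated with a short script that reports which URL scheme each XML file in the BOINC data folder uses for WCG. This is a minimal sketch, not a BOINC tool: the default path C:\ProgramData\BOINC is an assumption (pass your own data folder as an argument), and it only scans top-level *.xml files.

```python
import re
import sys
from pathlib import Path

# Host to look for; the pattern matches both http:// and https:// forms.
HOST = "www.worldcommunitygrid.org"


def find_url_schemes(data_dir: Path, host: str = HOST) -> dict[str, set[str]]:
    """Map each top-level *.xml file in data_dir to the URL schemes used for host."""
    pattern = re.compile(r"(https?)://" + re.escape(host))
    hits: dict[str, set[str]] = {}
    for path in sorted(data_dir.glob("*.xml")):
        # errors="replace" so a partly corrupted file does not abort the scan
        text = path.read_text(encoding="utf-8", errors="replace")
        schemes = set(pattern.findall(text))
        if schemes:
            hits[path.name] = schemes
    return hits


if __name__ == "__main__":
    # Assumed default Windows data directory; pass another path as an argument.
    root = Path(sys.argv[1] if len(sys.argv) > 1 else r"C:\ProgramData\BOINC")
    for name, schemes in find_url_schemes(root).items():
        print(f"{name}: {', '.join(sorted(schemes))}")
```

A file listed with both "http" and "https" is the mixed case described in the post.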

9)

Message boards :

BOINC client :

"SSL Connect Error" BOINC 7.20.2 for Windows 10 22H2

(Message 111577)

Posted 15 Apr 2023 by Joseph Stateson

Post: I have hundreds of these messages; they only go away after attaching using http.

JYSArea51
86 World Community Grid 4/14/2023 10:37:06 PM This project seems to have changed its URL. When convenient, remove the project, then add http://www.worldcommunitygrid.org/
87 World Community Grid 4/14/2023 11:12:06 PM This project seems to have changed its URL. When convenient, remove the project, then add http://www.worldcommunitygrid.org/
88 World Community Grid 4/14/2023 11:14:09 PM This project seems to have changed its URL. When convenient, remove the project, then add http://www.worldcommunitygrid.org/
89 World Community Grid 4/14/2023 11:16:13 PM This project seems to have changed its URL. When convenient, remove the project, then add http://www.worldcommunitygrid.org/

EDIT: I should have mentioned that I have several 22H2 systems (Win 10 & 11) that show http as well as https (like you), and I do not have any problem connecting to World Community Grid.
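With hundreds of these messages in a log, a small parser can confirm which replacement URL the client keeps suggesting. A minimal sketch, assuming the message wording exactly as quoted above; the regular expression is written for that text only and is not an official BOINC log format.

```python
import re
from collections import Counter

# Matches the client message quoted above and captures the suggested URL.
URL_CHANGE = re.compile(
    r"This project seems to have changed its URL\. "
    r"When convenient, remove the project, then add (\S+)"
)


def suggested_urls(log_text: str) -> Counter:
    """Count how often each replacement URL is suggested in the log text."""
    return Counter(URL_CHANGE.findall(log_text))


if __name__ == "__main__":
    sample = (
        "86 World Community Grid 4/14/2023 10:37:06 PM This project seems to have "
        "changed its URL. When convenient, remove the project, then add "
        "http://www.worldcommunitygrid.org/\n"
        "87 World Community Grid 4/14/2023 11:12:06 PM This project seems to have "
        "changed its URL. When convenient, remove the project, then add "
        "http://www.worldcommunitygrid.org/\n"
    )
    for url, count in suggested_urls(sample).items():
        print(f"{count} x {url}")
```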

10)

Message boards :

BOINC client :

"SSL Connect Error" BOINC 7.20.2 for Windows 10 22H2

(Message 111574)

Posted 15 Apr 2023 by Joseph Stateson

Post: BOINC 7.20.2 on Windows 7 Pro SP1 64-bit works fine: I just noticed you are using https. Detach and use http://www.worldcommunity
The list in the BOINC folder is incorrect and shows https; it should be http. Maybe this makes a difference in 22H2, just guessing. Edit: if "global_prefs:" has worldcommunity, make sure it is http and not https.
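To see which scheme the project list in the BOINC folder gives for WCG, the file can be read with Python's standard XML parser. A minimal sketch, assuming the file is named all_projects_list.xml in the data folder and holds <project> elements with <name> and <url> children; check both assumptions against your own copy.

```python
import sys
import xml.etree.ElementTree as ET
from pathlib import Path


def project_urls(xml_text: str, name_fragment: str) -> list[tuple[str, str]]:
    """Return (name, url) pairs for projects whose name contains name_fragment."""
    root = ET.fromstring(xml_text)
    found = []
    for project in root.iter("project"):
        name = (project.findtext("name") or "").strip()
        url = (project.findtext("url") or "").strip()
        if name_fragment.lower() in name.lower():
            found.append((name, url))
    return found


if __name__ == "__main__":
    # Assumed default Windows data directory; pass another path as an argument.
    data_dir = Path(sys.argv[1] if len(sys.argv) > 1 else r"C:\ProgramData\BOINC")
    list_file = data_dir / "all_projects_list.xml"
    if list_file.exists():
        text = list_file.read_text(encoding="utf-8", errors="replace")
        for name, url in project_urls(text, "World Community"):
            print(f"{name}: {url} (scheme: {url.split('://', 1)[0]})")
    else:
        print(f"{list_file} not found")
```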

11)

Message boards :

BOINC client :

"SSL Connect Error" BOINC 7.20.2 for Windows 10 22H2

(Message 111573)

Posted 15 Apr 2023 by Joseph Stateson

Post: I had a similar problem upgrading to 22H2: a required driver had an expired certificate and "core isolation" declared it explicitly revoked. I was unable to run the app except on a pre-22H2 system. If you have core isolation enabled, then disable it. If it is disabled, then enable it. Look in the (BOINC) event log and see if the error message changes when you make changes in core isolation. The Windows event log may show more info, so you might check that. If it is not possible to enable core isolation, then click on "details" and see why it cannot be enabled. I had about 6 problem drivers that I had to update or remove to enable core isolation. Edit: changed protection to isolation.

12)

Message boards :

Projects :

News on Project Outages

(Message 111477)

Posted 1 Apr 2023 by Joseph Stateson

Post: Things have gone downhill ever since SETI folded up. I went over to BoincStats and made the following notes:
- 150 retired projects
- 43 active projects (unaccountably including Collatz!)
- 24 projects that have at least 1 work unit of data
Out of those 24 projects with data, only 9 have enough to be candidates for the next crunching contest. Out of those 9, 2 currently have data and statistics collection problems, and another 3 have their servers hosted in Russia. Does anyone have or know of a chart of BOINC project growth/loss? I am guessing a bell curve would show SETI at the top. If BOINC were a company, there would be shareholders looking for new management. My 0.02c. Sorry if I offended anyone. Feel free to delete this post or move it somewhere management won't see it.

13)

Message boards :

Projects :

certificate problem at asteroids at home

(Message 111267)

Posted 12 Mar 2023 by Joseph Stateson

Post: It's all good now. It took 6 hours for all my completed tasks to finally upload. I had a huge queue when the cert expired. The problem was made worse by the WCG tasks that cannot upload yet: BOINC tried and failed each WCG upload before going on to Asteroids. I should have requested a restart of the transfers for just Asteroids. I suspect all my WCG tasks have passed their deadline.
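Retrying only one project's uploads can be scripted around boinccmd, whose `--file_transfer URL filename retry` operation retries a single transfer. A minimal sketch: the list of (project URL, file name) pairs is hypothetical input you would fill in yourself, for example from the Transfers tab or `boinccmd --get_file_transfers`; the script does not parse that output, and the file names and the Asteroids URL below are assumed placeholders.

```python
import shutil
import subprocess


def retry_commands(transfers: list[tuple[str, str]], project_url: str) -> list[list[str]]:
    """Build one boinccmd retry command per pending transfer of project_url."""
    return [
        ["boinccmd", "--file_transfer", url, name, "retry"]
        for url, name in transfers
        if url == project_url
    ]


if __name__ == "__main__":
    # Hypothetical pending transfers: (project URL, file name) pairs.
    pending = [
        ("https://www.worldcommunitygrid.org/", "wcg_result_0"),
        ("https://asteroidsathome.net/boinc/", "ps_result_0"),
    ]
    commands = retry_commands(pending, "https://asteroidsathome.net/boinc/")
    if shutil.which("boinccmd") is None:
        # boinccmd is not on PATH: only show what would be run.
        for cmd in commands:
            print(" ".join(cmd))
    else:
        for cmd in commands:
            subprocess.run(cmd, check=False)
```

Filtering by project URL keeps the stuck WCG uploads out of the retry loop, which is the ordering problem described in the post.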

14)

Message boards :

Projects :

certificate problem at asteroids at home

(Message 111257)

Posted 11 Mar 2023 by Joseph Stateson

Post: Cannot easily log in. It seems their certificate expired:

Unable to connect

An error occurred during a connection to asteroidsathome.com.

The site could be temporarily unavailable or too busy. Try again in a few moments.

If you are unable to load any pages, check your computer’s network connection.

If your computer or network is protected by a firewall or proxy, make sure that Firefox is permitted to access the web.

It fails on both Windows and Ubuntu. I can log in using Edge, but I have to confirm that I know it is dangerous.

Websites prove their identity via certificates, which are valid for a set time period. The certificate for asteroidsathome.net expired on 3/9/2023.

Error code: SEC_ERROR_EXPIRED_CERTIFICATE
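The browsers refuse the site because the certificate's notAfter date has passed. The same check can be reproduced with Python's ssl module (3.7 or later). A minimal sketch: `ssl.cert_time_to_seconds` parses the date format certificates use, and a default verifying context reports an expired certificate as `ssl.SSLCertVerificationError`. The host name is only an example taken from the error above.

```python
import socket
import ssl
import sys
import time
from typing import Optional


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Days from now until a notAfter string such as 'Mar  9 00:00:00 2023 GMT'."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


def check_host(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Connect with certificate verification on and describe the result."""
    context = ssl.create_default_context()
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host) as tls:
                not_after = tls.getpeercert()["notAfter"]
                return f"OK, {days_until_expiry(not_after):.0f} days left (notAfter {not_after})"
    except ssl.SSLCertVerificationError as err:
        # For an expired certificate the message is typically "certificate has expired".
        return f"verification failed: {err.verify_message}"
    except OSError as err:
        return f"connection failed: {err}"


if __name__ == "__main__":
    if len(sys.argv) > 1:
        print(check_host(sys.argv[1]))
    else:
        print("usage: check_cert.py HOST   (for example asteroidsathome.net)")
```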

15)

Message boards :

Questions and problems :

Not possible to recover from "unable to parse account file"

(Message 111007)

Posted 6 Feb 2023 by Joseph Stateson

Post: I had a video card overheat and freeze the system. The card problem was fixed, but after booting up, the message log showed the "unable to parse" message for both the Milkyway account and statistics XML files. In addition, there were 900 messages about "project not in state file", I assume one message for each of the 900 jobs waiting to run. The Milkyway job "txt" file was 43 MB in size. Both those XML files were empty, although their size was not 0. Since the account files for Milkyway are identical on all my systems, I copied over both an account file and a statistics file to replace the corrupted files. Although this "worked", all 900 jobs were marked as "abandoned" on the server and another 900 were downloaded. By "worked" I mean I did not have to delete the project and reattach it to fix the corrupted files. There must have been more corrupted files than just the account and statistics files. I am not sure why the account file was being rewritten, since it never changes unless the project changes their HTML. It seems to me the account file should be opened in read-only mode. I am guessing it was opened in read-write mode and was still open when the graphics board hung. The account and statistics files for WUProp were also corrupted. I did not bother to copy those files over, and the project disappeared completely after rebooting. Unlike Milkyway, I will have to add that project back in if I want it. Since the 900 tasks were "abandoned", I assume they cannot be re-sent as "ghost tasks". I am tempted to have the OS set the attribute of the account file to read-only to prevent it from being corrupted.
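Files that have a non-zero size but no usable XML, as described above, can be detected before the client starts. A minimal sketch, assuming account files match account_*.xml and statistics files match statistics_*.xml in the data folder (verify the names on your own system); it also shows the read-only idea from the post, although whether the client tolerates a read-only account file is untested here.

```python
import os
import stat
import sys
import xml.etree.ElementTree as ET
from pathlib import Path


def unparsable_files(data_dir: Path) -> list[str]:
    """Return names of account/statistics XML files that are not well-formed XML."""
    bad = []
    for pattern in ("account_*.xml", "statistics_*.xml"):
        for path in sorted(data_dir.glob(pattern)):
            try:
                ET.parse(path)
            except ET.ParseError:
                # Catches zero-filled or truncated files that still have a non-zero size.
                bad.append(path.name)
    return bad


def make_read_only(path: Path) -> None:
    """Clear write permission; on Windows this sets the read-only attribute."""
    os.chmod(path, stat.S_IREAD)


if __name__ == "__main__":
    # Assumed default Windows data directory; pass another path as an argument.
    root = Path(sys.argv[1] if len(sys.argv) > 1 else r"C:\ProgramData\BOINC")
    for name in unparsable_files(root):
        print(f"cannot parse {name}")
```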

16)

Message boards :

Projects :

How can "Advances in GPUs make the BOINC program redundant" ?

(Message 110967)

Posted 26 Jan 2023 by Joseph Stateson

Post: A quote from the POEM@home Wikipedia article:
POEM@Home was a volunteer computing project hosted by the Karlsruhe Institute of Technology and running on the Berkeley Open Infrastructure for Network Computing (BOINC) software platform. It modeled protein folding using Anfinsen's dogma. POEM@Home was started in 2007 and, due to advances using GPUs that rendered the BOINC program redundant, concluded in October 2016.[1][2] The POEM@home applications were proprietary.
I recall that POEM did protein folding. Perhaps the Folding@home project made POEM redundant? Perhaps one of the Wikipedia editors here at BOINC can correct their conclusions.

17)

Message boards :

GPUs :

OpenCL missing after Nvidia re-install: fixed a really strange way

(Message 110921)

Posted 15 Jan 2023 by Joseph Stateson

Post: Hope this does not happen to anyone else. I am posting my solution in case someone needs it. Background: I was debugging a problem with a video board, and in the process I lost OpenCL; Windows failed to boot and tried a repair. I got Windows working after removing the defective video board. Obviously, this caused problems. BOINC was unable to process any work units requiring OpenCL. The log showed that OpenCL was available for the CPU and the Intel GPU but not for the Nvidia boards. However, the log showed that CUDA was still available and had the correct version and date. Running clinfo.exe showed exactly the same thing as BOINC did: OpenCL only for the Intel CPU. I reinstalled Nvidia driver 526.86, but that did not fix the OpenCL problem. I then got the latest Nvidia driver, 528.02, and did a clean install, but that did not fix the problem either. Googling, I read that windows\system32\OpenCL.dll was where all the good stuff is kept. That Nvidia version was dated 2016. I replaced that DLL with the Nvidia OpenCL.dll dated 2022 from another system and rebooted. OpenCL is now working fine. Unaccountably, an install of the latest Nvidia driver did not update that DLL.
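A quick way to spot a stale copy like the one described above is to check the timestamp of OpenCL.dll before and after a driver install. A minimal sketch, assuming the default C:\Windows\System32 location; the modification date is only a rough proxy (the file version in the Properties dialog is more reliable), and the 2020 cutoff is an arbitrary example.

```python
import datetime as dt
import sys
from pathlib import Path

DEFAULT_DLL = Path(r"C:\Windows\System32\OpenCL.dll")


def dll_modified(path: Path) -> dt.date:
    """Return the file's last-modified date in local time."""
    return dt.date.fromtimestamp(path.stat().st_mtime)


def looks_stale(path: Path, oldest_ok_year: int) -> bool:
    """True if the file was last modified before oldest_ok_year."""
    return dll_modified(path).year < oldest_ok_year


if __name__ == "__main__":
    dll = Path(sys.argv[1]) if len(sys.argv) > 1 else DEFAULT_DLL
    if not dll.exists():
        print(f"{dll} not found")
    else:
        # 2020 is an arbitrary cutoff; the stale copy in the post was dated 2016.
        print(f"{dll}: modified {dll_modified(dll)}, stale={looks_stale(dll, 2020)}")
```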

18)

Message boards :

Questions and problems :

BOINC calculation on custom event? API?

(Message 110919)

Posted 14 Jan 2023 by Joseph Stateson

Post: For what it's worth, I finally got it to work using PowerShell as shown here.

19)

Message boards :

Questions and problems :

BOINC calculation on custom event? API?

(Message 110918)

Posted 14 Jan 2023 by Joseph Stateson

Post: Hello there, As Ian&Steve C mentioned, you can use the BOINC command tool (boinccmd) in any script. However, if you are only concerned about overheating the CPU or GPU and you are running Windows, there is another option: you might consider using eFMer's TThrottle. It slows down ("pauses") the CPU or GPU when it detects too high a temperature. It does not control fans like MSI's Afterburner, but it is a good app to use as a failsafe.
Your script can also be run under eFMer's BoincTasks app. For example, you can set a BoincTasks rule to run your script when the temperature exceeds a certain value. BoincTasks can supply arguments to identify the project that is running hot, and your script can handle the problem.
There are also options in BoincTasks to do any of the following: Allow new work, No more work, Resume network, Resume project, Run program, Snooze, Snooze GPU, Suspend network, Suspend project, Suspend task.
These can be based on any of the following conditions: Elapsed Time, CPU %, Progress %, Time Left, Progress / min, % Use, Temperature, Status, Wall-clock Time, Connection, Deadline, Time Left Project.
For example, you would select "Temperature" and a value, then "Run Program", and enter the path to your script. At one time I had been sending a text message to myself if there was a problem, but Google made it difficult to script mail by requiring an app password for Gmail.
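A script for a BoincTasks "Run Program" rule can be as small as a wrapper around boinccmd. A minimal sketch, assuming the rule passes the project URL as the first argument; how BoincTasks actually formats the arguments it supplies is not shown here and should be checked in its documentation. `boinccmd --project URL suspend` is a standard boinccmd operation.

```python
import shutil
import subprocess
import sys


def suspend_command(project_url: str) -> list[str]:
    """Build the boinccmd call that suspends one project."""
    return ["boinccmd", "--project", project_url, "suspend"]


def suspend(project_url: str) -> int:
    """Run the suspend command and return its exit code (1 if boinccmd is missing)."""
    cmd = suspend_command(project_url)
    if shutil.which("boinccmd") is None:
        print("boinccmd not found on PATH; would run:", " ".join(cmd))
        return 1
    # boinccmd talks to the local client; add --host/--passwd if the script
    # runs as a user that cannot read the client's GUI RPC password.
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("usage: suspend_hot.py PROJECT_URL")
    else:
        sys.exit(suspend(sys.argv[1]))
```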

20)

Message boards :

Questions and problems :

Boinc.exe terminates at start on W10

(Message 110459)

Posted 15 Nov 2022 by Joseph Stateson

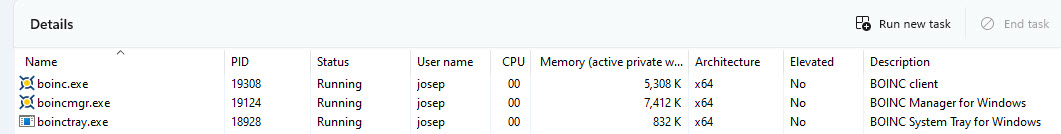

Post: It seems to work sometimes, actually now it is working, like every 3rd boot or something like that. When looking in Task Manager for an app, be sure to look under "Users", not just "Processes". It is possible for BOINC to be missing from Processes but running just fine under Users. When you installed BOINC, I assume you installed for all users and did not do a service install. As RobSmith mentioned, you should not run BOINC in elevated mode, especially if the Manager is running in standard mode. You might want to verify that all 3 BOINC apps are NOT running in elevated mode. Looking at your original post, I spotted a few problem projects:
- setiathome is not operational and should be suspended or removed.
- Cosmology@Home no longer has a valid certificate. You might consider attaching to Universe@Home instead.
- Einstein: I recommend you detach from Einstein and then use an elevated command prompt to make sure that all Einstein files have been deleted from both "data" and "data\projects".
If you continue having problems, I recommend you use a free tool such as Revo Uninstaller to uninstall BOINC and Revo's scan capability to find and delete all references. I would also use elevated mode to verify BOINC is gone from the D drive.
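The check for leftover Einstein files can also be done with a short script run from an elevated prompt. A minimal sketch, assuming the usual BOINC data layout (files directly in the data folder plus a projects subfolder) and that the name fragment "einstein" appears in the leftover file and folder names; it only lists matches and deletes nothing.

```python
import sys
from pathlib import Path


def leftovers(data_dir: Path, fragment: str = "einstein") -> list[Path]:
    """List entries in data_dir and data_dir/projects whose names contain fragment."""
    fragment = fragment.lower()
    found: list[Path] = []
    for folder in (data_dir, data_dir / "projects"):
        if folder.is_dir():
            found.extend(p for p in sorted(folder.iterdir()) if fragment in p.name.lower())
    return found


if __name__ == "__main__":
    # Assumed default Windows data directory; pass another path (e.g. on D:) as an argument.
    root = Path(sys.argv[1] if len(sys.argv) > 1 else r"C:\ProgramData\BOINC")
    for path in leftovers(root):
        print(path)
```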

Copyright © 2024 University of California.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation.