Thread 'PCI express risers to use multiple GPUs on one motherboard - not detecting card?'


Joined: 5 Oct 06, Posts: 5149

Quote: "Nvidia drivers can decide to run OpenCL tasks in CUDA if they want..."

Yes. OpenCL is an intermediate-level, cross-platform programming language. Every manufacturer's driver, not just Nvidia's, compiles the OpenCL source code into machine-code primitives that match the hardware in use. And since Nvidia's hardware runs CUDA primitives, that's what the Nvidia compiler implementation is designed to output. It's not a 'decision' by the driver: it's a deterministic pathway defined by the programmer.

Joined: 8 Nov 19, Posts: 718

I never managed to make those PCIe splitters work on my motherboards.

Joined: 24 Dec 19, Posts: 241

Why do you think that? It looks like it shows increments of 1% to me. Readings from my test bench show 0-10% and every value in between, which is expected for the link speed/width and the application being run. https://imgur.com/a/rhBWRqy

Joined: 8 Nov 19, Posts: 718

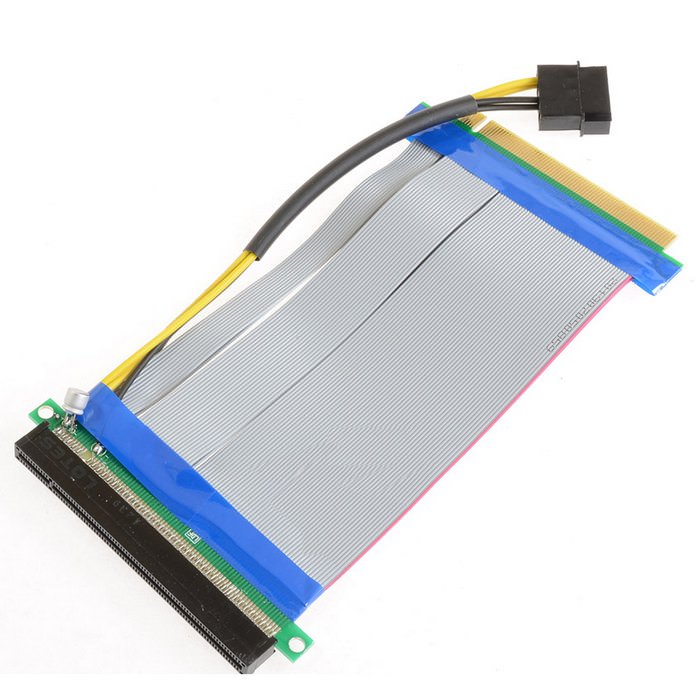

Quote: "I never managed to make it work on my motherboards, those pcie splitters."

I would say the opposite. Those PCIe x1-to-4 splitters never worked for me; I use all the others. My USB PCIe risers look like this: [image], and I use these: [image]

Joined: 24 Dec 19, Posts: 241

The problem with the grey cables is that they aren't shielded and usually can't handle PCIe gen 3 speeds, depending on cable length and other nearby sources of interference (like other unshielded PCIe cables, or power cables). Quality USB 3.0 cables are usually shielded well enough for a stable PCIe gen 3 link over 1-3 ft. But USB-cabled risers were really designed for crypto mining, which has very low bandwidth requirements and can easily run at gen 1/2 with no penalty, so they usually come with cheaper, low-quality cables. I've used the 4-in-1 switch boards successfully for mining, but I've had limited use of them for BOINC crunching. They work OK, provided you get a board that's not defective. But you have to realize that a lot of these things were made cheaply, with poor quality control, to capitalize on the mining boom, so some work and some don't.

Joined: 8 Nov 19, Posts: 718

Shielded risers aren't necessary for risers 12 inches long or shorter. The weakness of the grey ones I use is not static interference but solder points breaking off (which is why they're doubled).

Joined: 24 Dec 19, Posts: 241

It really depends on the situation. I've had many, many of those grey risers that can't maintain gen 3 speeds, even ones shorter than 12". But they worked acceptably at gen 2 or gen 1, which have much less strict signal-integrity requirements. It wasn't just a case of broken connections. But weak connections are also a concern with them, especially the ones that have a power cable attached, like in the pictures. That cable is usually soldered by hand and covered in hot glue or something similar. It's pretty easy to get one that's defective or not made correctly, causing additional issues or even frying your components. In general, I won't use the grey ribbon risers anymore.

Joined: 8 Nov 19, Posts: 718

Quote: "It really depends on the situation. I’ve had many many of those grey risers that can’t maintain gen 3 speeds, even those shorter than 12”. But they worked acceptably at gen 2 or gen 1, which have much less strict requirements for the signal integrity. It wasn’t just a case of broken connections."

I've never had a single issue with them, and I've been using them for years: gen 3, mostly PCIe x16 risers, all feeding RTX GPUs. For x1 risers, I use USB. I do have a few x1 ribbon risers, but not the grey ones (mine are shielded and 12" or longer). I never tried the x8 grey ribbon risers, but I did use the x4 risers for a while, until I found a replacement that allowed more flexibility.

Tom M, Joined: 6 Jul 14, Posts: 90

+1 "Me too" :) I have had VERY good luck with them.

Tom

Tag. You're "it".

Joined: 24 Dec 19, Posts: 241

I finally found a project that relies very heavily on PCIe bandwidth, to the degree that running a USB riser at PCIe 3.0 x1 still isn't enough, much less a USB riser at lower PCIe generations: GPUGRID. It shows ~38-40% PCIe use on a PCIe 3.0 x8 link and about 18-20% on PCIe 3.0 x16. This means that anything less than PCIe 3.0 x4, 2.0 x8, or 1.0 x16 will likely be constrained. My two high-bandwidth systems are handling it well, though. My fastest system has 10 GPUs in total: 8 GPUs at 3.0 x8 and 2 GPUs at 3.0 x1 (USB). So I just excluded the 2 USB-connected cards from running GPUGRID.
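To see why those two readings point at 3.0 x4 / 2.0 x8 / 1.0 x16 as the threshold, here is a minimal Python sketch of the arithmetic. It assumes the "PCIe use" percentage from the monitoring tool maps linearly onto the link's theoretical one-direction bandwidth (an assumption; tools may measure bus utilization differently), and uses the standard per-lane rates (2.5, 5 and 8 GT/s) with 8b/10b encoding for gen 1/2 and 128b/130b for gen 3. The function names are illustrative only.

```python
# Per-lane signalling rate (GT/s) and line-encoding efficiency for each PCIe generation.
GT_PER_S = {1: 2.5, 2: 5.0, 3: 8.0}
ENCODING = {1: 8 / 10, 2: 8 / 10, 3: 128 / 130}


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link, in GB/s."""
    # GT/s * encoding efficiency = Gbit/s of payload per lane; divide by 8 for GB/s.
    return GT_PER_S[gen] * ENCODING[gen] / 8 * lanes


def demand_gb_per_s(utilization_pct: float, gen: int, lanes: int) -> float:
    """Convert a reported utilization percentage to GB/s (assumes a linear scale)."""
    return utilization_pct / 100 * link_gb_per_s(gen, lanes)


if __name__ == "__main__":
    # Upper ends of the two ranges quoted in the post.
    need_x8 = demand_gb_per_s(40, 3, 8)
    need_x16 = demand_gb_per_s(20, 3, 16)
    print(f"3.0 x8  at 40% -> {need_x8:.2f} GB/s")
    print(f"3.0 x16 at 20% -> {need_x16:.2f} GB/s")

    # How busy smaller links would be if they had to carry the same traffic.
    for gen, lanes in [(3, 1), (3, 4), (2, 8), (1, 16)]:
        bw = link_gb_per_s(gen, lanes)
        share = need_x8 / bw * 100
        print(f"{gen}.0 x{lanes:<2} {bw:5.2f} GB/s, demand = {share:5.1f}% of link")
```

Both readings work out to roughly 3.15 GB/s of traffic. A 3.0 x4, 2.0 x8 or 1.0 x16 link (about 3.9-4.0 GB/s) would be around 80% busy carrying it, while a 3.0 x1 USB riser (about 0.98 GB/s) would need more than three times its capacity.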

Joined: 5 Oct 06, Posts: 5149

Quote: "How do you exclude certain GPUs on the same system from running a certain project?"

See the Client configuration - Options page in the User Manual.
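The relevant option on that page is `<exclude_gpu>` in `cc_config.xml`, which takes a project `<url>` plus optional `<device_num>`, `<type>` and `<app>` elements. The minimal Python sketch below generates such a file with the standard library. The GPUGRID URL and device numbers 8 and 9 are hypothetical placeholders: the URL must match the project URL exactly as your client lists it, and the device numbers are the ones the client reports for each GPU in its event log at startup. `build_cc_config` is an illustrative helper, not part of BOINC.

```python
import xml.etree.ElementTree as ET


def build_cc_config(project_url: str, device_nums: list[int], gpu_type: str = "NVIDIA") -> str:
    """Return cc_config.xml text excluding the given GPUs from one project."""
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    # One <exclude_gpu> block per device to keep away from this project.
    for num in device_nums:
        excl = ET.SubElement(options, "exclude_gpu")
        ET.SubElement(excl, "url").text = project_url
        ET.SubElement(excl, "device_num").text = str(num)
        ET.SubElement(excl, "type").text = gpu_type
    ET.indent(root)  # pretty-print; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical: the two USB-riser cards enumerated as devices 8 and 9.
    print(build_cc_config("https://www.gpugrid.net/", [8, 9]))
```

Any options you already have in `cc_config.xml` would need to be merged in by hand; this sketch only writes the exclusion entries.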

Joined: 8 Nov 19, Posts: 718

Quote: "I finally found a project that very heavily relies on PCIe bandwidth, to a degree that running a USB riser on 3.0 x1 still isn't enough, much less a USB riser at lower PCIe specs."

I run GPUGRID with an RTX 2080 Ti through a PCIe x1 slot on Linux, and it seems to work reasonably well. It's a test right now, and I've had to lower the power limit from 300 W to 200 W, at which my GPUs run at 1500-1700 MHz instead of 2 GHz, but they still seem to show 100% GPU utilization. Linux is much kinder to PCIe data. Pretty soon I'll raise the wattage back to 225-250 W, as I'll be removing one GPU tomorrow, just to run the ones I have installed as optimally as possible. I don't use a VM; I just run it straight from a $15 64 GB SATA SSD, and use F11 in the BIOS if I want to go back to the full-blown Windows 10 on my other drive.
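The post doesn't say how the power limit was lowered. On Linux, one common way (an assumption about this user's setup) is `nvidia-smi` with `-i` (GPU index) and `-pl` (power limit in watts), which needs root. The minimal Python sketch below only builds and prints the command unless `dry_run=False`; `power_limit_cmd` and `apply_power_limit` are hypothetical helper names, and GPU index 0 is a placeholder. The driver only accepts values inside the card's supported range, which `nvidia-smi -q -d POWER` reports.

```python
import shlex
import subprocess


def power_limit_cmd(gpu_index: int, watts: int) -> list[str]:
    """Build an nvidia-smi command that sets one GPU's power limit in watts."""
    if watts <= 0:
        raise ValueError("power limit must be positive")
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def apply_power_limit(gpu_index: int, watts: int, dry_run: bool = True) -> None:
    """Print the command; run it only if dry_run is False (needs root and the NVIDIA driver)."""
    cmd = power_limit_cmd(gpu_index, watts)
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical: cap GPU 0 at 200 W, as in the post (dry run only).
    apply_power_limit(0, 200)
```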

Copyright © 2025 University of California.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation.