Thread 'PCI express risers to use multiple GPUs on one motherboard - not detecting card?'




| Author | Message |

|---|---|

|

Joined: 24 Dec 19 Posts: 241

|

> Which GPU was it? What model? The heatsink, fan, etc. should have been designed by Nvidia too, just like Intel provides stock coolers for its CPUs. You can buy better ones, but you start with one that actually works, then buy a quieter one if necessary.

Not always. Both AMD and Nvidia have cards for which they do not release a reference design, meaning they produce the GPU itself and let the AIB make its own PCB and cooling solution. And even on the CPU side, Intel and AMD both produce CPUs that they do not include a stock heatsink for.

> Or maybe Nvidia shouldn't make chips with such a high TDP that it's almost impossible to cool them? Admittedly I have seen one AMD card like that, a dual-GPU card. There is no way in hell you can cool two GPUs in that space.

AMD is more guilty of this than Nvidia. You'd know that if you'd actually compared Nvidia and AMD cards at the same performance level and with similar release dates. Example:

| Relative performance | Model | TDP | Release date |
|---|---|---|---|
| 100% | AMD Radeon VII | 295 W | Feb 7, 2019 |
| 106% | Nvidia GTX 1080 Ti | 250 W | Mar 10, 2017 |
| 97% | Nvidia RTX 2070 | 175 W | Oct 17, 2018 |
| 99% | AMD RX 5700 XT | 225 W | Jul 7, 2019 |

AMD cards are less efficient, use more power, run hotter, and have more driver issues than comparable Nvidia cards. |
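To put a number on the efficiency claim, the relative-performance and TDP figures for the four cards listed above can be divided to get performance per rated watt. This is a rough sketch only: it divides by rated TDP rather than measured power draw, the relative-performance values are whatever the benchmark site reported, and the names `CARDS` and `perf_per_watt` are illustrative.

```python
# Relative performance (%) and rated TDP (W) as quoted in the post above.
# TDP is the rated board power, not measured draw, so these ratios are rough.
CARDS = [
    ("AMD Radeon VII", 100, 295),
    ("Nvidia GTX 1080 Ti", 106, 250),
    ("Nvidia RTX 2070", 97, 175),
    ("AMD RX 5700 XT", 99, 225),
]


def perf_per_watt(cards):
    """Return (model, relative performance per rated watt), best first."""
    ratios = [(model, perf / tdp) for model, perf, tdp in cards]
    return sorted(ratios, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for model, ratio in perf_per_watt(CARDS):
        print(f"{model:20s} {ratio:.3f} %/W")
```

With these inputs the RTX 2070 comes out ahead at about 0.554 %/W and the Radeon VII last at about 0.339 %/W; measured wall power under a specific BOINC application would give a more meaningful comparison.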

Joseph Stateson Joined: 27 Jun 08 Posts: 642

|

This thread is really off topic. I am declaring it a troll thread and am providing tools to identify abusers. My pick for Peter is the "Wank-O-Meter". The rest of you can decide what you are.

Simplified - Numeric

TROLL-O-METER

0 1 2 3 [4] 5 6 7 8 9 10

Simplified - Legend

T R O L L - O - M A T I C

PATHETIC ---------+---------INSPIRED

Simplified - with watchdog

0 1 2 3 4 5 6 7 8 9 10

+------------------------------+

|********************** |

|********************** |

+-----------------------------+

| (o o)

| -------oOO--(_)--OOo--------

Move along folks. Nothing to see here. Just a troll having a seizure. Show's over. Keep it moving.

TROLL-O-METER

1 2 3 4 5 6 7 8 9 +10 +20

|||||||||||||||||-|||||||

BULLSHIT-O-METER

1 2 3 4 5 6 7 8 9 +10 +20

||||||||||||||||||||||||

DUMBASS-O-METER

1 2 3 4 5 6 7 8 9 +10 +20

||||||||||||||||||||||||

Try to be a bit more subtle next time. Thanks for playing!

----------------------

0-1-2-3-4-5-6-7-8-9-10

----------------------

^

Better, but no bite. I am uninterested in getting into a flame war with you no matter how many personal attacks you make. If you insist on having a fight, go ahead and start without me.

.----------------------------------------.

[ reeky neighborhood watch Troll-O-Meter]

[---0---1---2---3--4--5--6--7--8---9---]

[||||||||| ]

'----------------------------------------'

Not bad, as trolls go. The Lame-o-meter was off scale on the highest range and the Clue-o-meter wouldn't register.

0 1 2 3 4 5 6 7 8 9 10

_________________________________

| | | | | | | | | | |

---------------------------------

^

|

OMG! They said it couldn't be done, but this post rates:

+--------------------------+

| 4 5 |

| 3 6 |

| 2 7 |

| 1 8 |

| 0 o 9 |

| -1 / |

| -2 / |

| -3 / |

+--------------------------+

| Troll-O-Meter |

'--------------------------'

Warning, troll-o-meters are susceptible to particularly stupid statements, and may give inaccurate readings under such conditions. A shrill response generally indicates an effective complaint.

/_______________________/|

| TROLL-O-METER(tm) ||

| ||

| .--- .--- ||

| | | millitrolls||

| .---' | _ _ ||

| | | . | || | ||

| ---' ' `-'`-' |/

`------------------------'

A bit better, but still not enough to hook me. Better luck on your next cast!

+----------------------+ +----------------------+

|0 1 2 3 4 5 6 7 8 9 10| |0 1 2 3 4 5 6 7 8 9 10|

| \ TROLL-O-METER | | WANK-O-METER / |

| \ | | / |

| \ | | / |

| \ | | / |

| \ | | / |

| \ | | / |

| \ | | / |

|Certifie\ next cal: | |Certified / next cal: |

| NIST \ date 12/5 | | NIST / date 12/5 |

+----------------------+ +----------------------+

*BANG*

That was the Troll-O-Meter exploding.

Fell for that one hook, line, and sinker.

#

;

;

@

+----------------+ ,.'

| .0 .2 .4 .8 1.0| ,.'

| ' |

| ` | ''..''..''%

+------------` |

| Troll-O-Met` (((o

+-----------` '.,

'.,

; #

;

*

_

_____|_|_____

| PLEASE! |

|-------------|

| Do NOT Feed |

| The Troll |

|_____________|

| |

| |

\ \ | | / /

.:\:/:.

+-------------------+ .:\:\:/:/:.

| PLEASE DO NOT | :.:\:\:/:/:.:

| FEED THE TROLLS | :=.' - - '.=:

| | '=(\ 9 9 /)='

| Thank you, | ( (_) )

| Management | /`-vvv-'\

+-------------------+ / \

| | @@@ / /|,,,,,|\ \

| | @@@ /_// /^\ \\_\

@x@@x@ | | |/ WW( ( ) )WW

\||||/ | | \| __\,,\ /,,/__

\||/ | | | jgs (______Y______)

/\/\/\/\/\/\/\/\//\/\\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\/\

|

|

Joined: 5 Oct 06 Posts: 5149

|

Do grow up. It's trolling if you post assertions on a technical board like this without being willing to back them up with answers to factual questions when asked. What was the year of manufacture of the Nvidia card "which broke in under a week"? |

|

Joined: 24 Dec 19 Posts: 241

|

> I wouldn't if I was them. It spoils their reputation when the cooler is designed badly.

I guess they know what they are doing, both being multi-billion-dollar companies and all. The cooler design of an AIB card reflects on the AIB, not on the chip manufacturer, especially when a reference design doesn't exist.

> I've never seen one. Every Intel I've bought has a stock fan.

Intel has MANY SKUs, dating all the way back to 2011, for CPUs that were shipped without a thermal solution. These are not simply CPUs that can be ordered “OEM”; these are CPUs that were never offered with one in the first place. And this is only desktop consumer stuff, not even including their server-grade stuff. https://www.intel.com/content/www/us/en/support/articles/000005940/processors.html

> Not in my experience.

This whole thread can probably be summed up by these four words. The reality of the world is not always the same as in your own little bubble. A lot of your opinions are based on very old experiences that don't reflect reality today. |

|

Joined: 24 Dec 19 Posts: 241

|

> AMD cards are less efficient, use more power, run hotter, and have more driver issues than comparable Nvidia cards.

Compute performance is highly dependent on the application being used. You have to answer the question: compute for “what”? The RTX 2070 Super is overall faster and more power efficient than an RX 5700 XT. For Einstein the 5700 XT will probably win, as their apps favor AMD cards. For SETI, since there are specialized applications for CUDA, the 2070 Super will embarrass the 5700 XT. It's very difficult to compare two different cards in compute scenarios, since the wildly different architectures require wildly different applications. You can't really make a true apples-to-apples comparison. |

|

Joined: 24 Dec 19 Posts: 241

|

That site is exactly what I used to show you the relative performance of the four cards listed a few posts back. |

|

Joined: 8 Nov 19 Posts: 718

|

> It's amazing that someone can come to such outrageous conclusions.

Nvidia drivers can decide to run OpenCL tasks in CUDA if they want; I've seen it happen. As for the 15%, it's an estimate based on playing around with WUs and tasks. Nvidia-Xserver only shows utilization in increments of roughly 15-20%, so it doesn't give accurate readings.

It's also amazing that you can accuse someone of "such outrageous conclusions" while providing absolutely no proof yourself. Not to mention, it's degrading and just plain not nice to say that about someone else (be it me or anyone else on this board). We're all here to learn! |
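If the coarse steps in Nvidia-Xserver are the problem, `nvidia-smi` can report GPU utilization as a whole-number percentage, along with power draw. A minimal sketch, assuming `nvidia-smi` is installed and on the PATH; the helper names `parse_gpu_stats` and `read_gpu_stats` are hypothetical, and only the parser can be exercised on a machine without an Nvidia GPU.

```python
import subprocess

# Fields listed by `nvidia-smi --help-query-gpu`. utilization.gpu is the
# percent of time a kernel was running during the sample period, reported
# as a whole number; it is not a measure of how busy the shaders were.
QUERY = "index,utilization.gpu,power.draw"


def _number(field, cast):
    """Convert one CSV field; nvidia-smi prints values like "[N/A]" when unsupported."""
    field = field.strip()
    return None if field.startswith("[") else cast(field)


def parse_gpu_stats(csv_text):
    """Parse `--format=csv,noheader,nounits` output, one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        index, util, power = line.split(",")
        stats.append({
            "index": int(index),
            "utilization_pct": _number(util, int),
            "power_w": _number(power, float),
        })
    return stats


def read_gpu_stats():
    """Run nvidia-smi once; requires the Nvidia driver and nvidia-smi on PATH."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_gpu_stats(out)
```

Calling `read_gpu_stats()` every second or so while tasks run gives 1% steps that can be compared against the 15-20% increments mentioned above.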

|

Joined: 8 Nov 19 Posts: 718

|

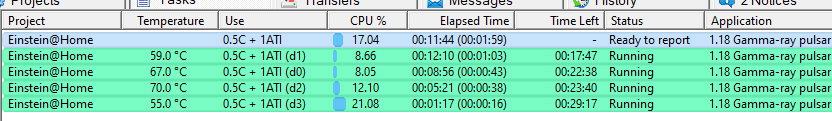

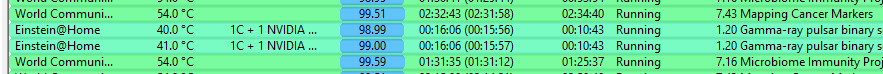

The actual CPU load that Nvidia drivers put on the CPU can be seen in htop. The case shown in the screenshots above is just an example, not an actual representation of Nvidia driver CPU load; however, the CPU bars show quite accurately the actual CPU usage and the idle data sent from the CPU to the GPU.

The green parts of the CPU bars (could be any other assigned color) reflect the CPU actually crunching data that is sent to the GPU, while the red parts show the idle data. With Nvidia, the bars will nearly always fill to 100%: the green part can be anywhere between 1 and 99%, and the red part simply fills up the rest to 100%. The idle data is there to prevent the system from switching the job to another core, allowing you to enjoy the highest performance (this way, the job's cached data doesn't have to be reloaded on a different core). This doesn't work with all operating systems; despite this polling (idle data), some operating systems still swap cores around.

AMD would show just the green bars, and the space for the red bars (idle data) would be black (unused). This has benefits, in that AMD GPUs tax the CPU less and thus run the computer more efficiently. But then again, the GPU side runs less efficiently than with Nvidia.

About idle data being absent when computing with AMD GPUs: I've seen the same thing happen with Nvidia drivers when CPU cores get shared between GPUs (e.g. a 2-core/4-thread CPU sharing its 4 threads between 3 or 4 GPUs in Linux). Nvidia drivers will disable the idle polling in that case, because at least one thread shares a core. However, there's a slight performance penalty that comes along with it.

No one has really tried to measure it, but I think there's a slight possibility that the idle data Nvidia drivers send is also consuming CPU resources and costing extra watts, though the trade-off on most PCs should be small (adding only a few watts for a few percent higher performance). |
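The polling-versus-idle difference described above is the general spin-wait versus blocking-wait trade-off. The Python sketch below is only an analogy, not Nvidia's driver code, and `waiter_cpu_seconds` is a hypothetical helper: one thread waits for a completion flag by polling it in a loop, the other blocks until woken, and `time.thread_time()` shows how much CPU each burns while waiting.

```python
import threading
import time


def waiter_cpu_seconds(spin, wait_s=0.3):
    """Return the CPU time a worker thread burns while waiting about wait_s seconds.

    spin=True  -> poll a flag in a tight loop (busy-waiting, like the red bars)
    spin=False -> block on the event and let the OS park the thread
    """
    done = threading.Event()
    result = {}

    def worker():
        start = time.thread_time()  # CPU time consumed by this thread only
        if spin:
            while not done.is_set():
                pass  # keeps a core busy without doing useful work
        else:
            done.wait()  # sleeps until set() is called
        result["cpu"] = time.thread_time() - start

    t = threading.Thread(target=worker)
    t.start()
    time.sleep(wait_s)  # stands in for the GPU working on a kernel
    done.set()          # stands in for the GPU signalling completion
    t.join()
    return result["cpu"]


if __name__ == "__main__":
    print(f"spin-wait: {waiter_cpu_seconds(True):.3f} s of CPU")
    print(f"blocking : {waiter_cpu_seconds(False):.3f} s of CPU")
```

Spinning typically reacts to completion a little sooner and keeps the thread on the same core, at the cost of a fully busy core; blocking frees the core but adds wake-up latency. For CUDA programs the runtime exposes this choice through `cudaSetDeviceFlags` with `cudaDeviceScheduleSpin`, `cudaDeviceScheduleYield` or `cudaDeviceScheduleBlockingSync`; whether a given BOINC application sets these is not known from this thread.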

|

Joined: 8 Nov 19 Posts: 718

|

> FUNCTIONALLY a VRM is a power supply, or even a component within a power supply.

My 2 cents: a VRM is a Voltage Regulator Module. It regulates the voltage; it doesn't convert it. It takes DC in and makes sure that the GPU core gets the voltage it needs. This is different from a PSU, which not only converts AC to DC but also changes the voltage/current, and I'd say it's safe to say that a PSU is larger than a VRM. A VRM is just a feedback-loop controller that makes sure voltage and current draw stay within limits. A VRM is a plain digital chip, while a PSU has capacitors and digital circuitry inside, and thus is more complex than a VRM. I think most people would see a VRM as a controller rather than a generator of power (old-style PSUs were transformer-based and thus generated voltage, though newer ones are based on digital circuitry, which makes them function much closer to a VRM). My 2 uneducated cents. I don't care if I'm right or wrong about this. This is just what I (to this day) believe. |

|

Joined: 8 Nov 19 Posts: 718

|

> Compute performance is highly dependent on the application being used. You have to answer the question: compute for “what”?

From reading the forum, one user said the optimal setting for an RX 5700 (XT) was, I believe, 190W with an overclock. Similar performance came from an RTX 2070 at 137W (slower) and an RTX 2070 Super at 150W (faster than the 5700). So while AMD may be faster, it also consumes more power. The extra 40W results in roughly $40 more on electricity per year.
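The yearly cost of an extra 40W depends on how many hours the card runs and on the local electricity price, neither of which the post states. A quick check, assuming 24/7 crunching and a hypothetical price of $0.12/kWh; the function names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 h; assumes the card crunches around the clock


def annual_cost(extra_watts, price_per_kwh, hours=HOURS_PER_YEAR):
    """Yearly cost of drawing extra_watts continuously at price_per_kwh."""
    kwh = extra_watts * hours / 1000
    return kwh * price_per_kwh


def break_even_price(extra_watts, yearly_cost, hours=HOURS_PER_YEAR):
    """Electricity price at which extra_watts costs yearly_cost per year."""
    return yearly_cost / (extra_watts * hours / 1000)


if __name__ == "__main__":
    extra = 190 - 150  # RX 5700 (XT) vs RTX 2070 Super, figures from the post
    print(f"{extra * HOURS_PER_YEAR / 1000:.1f} kWh/year")                 # 350.4
    print(f"${annual_cost(extra, 0.12):.2f} at a hypothetical $0.12/kWh")  # $42.05
    print(f"$40/year implies ${break_even_price(extra, 40):.3f}/kWh")     # 0.114
```

Running 24/7, the extra 40W is about 350 kWh a year, so the $40 figure corresponds to roughly $0.11/kWh; the cost scales linearly with both the tariff and the fraction of the year the card is actually crunching.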

Copyright © 2025 University of California.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation.