Thread 'PCI express risers to use multiple GPUs on one motherboard - not detecting card?'


| Author | Message |

|---|---|

|

Joined: 24 Dec 19 Posts: 241

|

besides the fact that wikipedia is editable by anyone... again, STANDARDIZED PC COMPONENTS. that's what is being discussed. a VRM is not a PSU. |

|

Joined: 25 Nov 05 Posts: 1654

|

This "discussion" is getting a bit heated. And drifting from at least the last part of the topic title. |

|

Joined: 24 Dec 19 Posts: 241

|

you could try googling PC VRM and see how many PSUs show up, or vice versa. there's a reason standardized nomenclature exists. |

|

Joined: 25 May 09 Posts: 1335

|

I missed those - some are AC-DC and others are, as you say, AC-AC. |

|

Joined: 25 May 09 Posts: 1335

|

FUNCTIONALLY a VRM is a power supply, or even a component within a power supply. |

Dave Joined: 28 Jun 10 Posts: 2897

|

> Extraneous information aside, I still disagree that a VRM is a PSU. The lack of AC-DC conversion is a major design element that separates the two.

Please don't tell my laptop that the box I connect to the 12V socket in a car isn't a PSU, as I would like to keep using it for longer than its internal battery allows when away from mains. |

Keith Myers Joined: 17 Nov 16 Posts: 906

|

> but I also suspect you can apply some optimizations (you'd have to inquire at Einstein or MW forums if there are any) to make those jobs run faster, at the expense of using more resources.

If you are referring to the name of the optimization for the SETI special application, the one being referenced is -nobs (or no blocking sync). The mechanism that was used at GPUGrid for the older deprecated applications was called SWAN_SYNC=1 and did the same thing. It is no longer used there for the current applications. What the parameter does is prevent the cpu thread servicing the gpu task from switching away to do other things while it is listening for the gpu's request for data. It all depends on how the application developer wrote the science application. The Einstein and Milkyway developers wrote their applications differently than the GPUGrid and SETI developers did. Different goals and such. |
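As a rough illustration of the trade-off described above, here is a minimal Python sketch contrasting a blocking wait with a spinning (non-blocking-sync style) wait. It is not the code of any science application: the function names are hypothetical, and a `threading.Timer` stands in for the GPU finishing its work.

```python
import threading
import time


def wait_blocking(done: threading.Event) -> int:
    """Blocking sync: sleep until signalled, leaving the core free for other work."""
    done.wait()
    return 0  # no polling iterations were needed


def wait_spinning(done: threading.Event) -> int:
    """No blocking sync: poll in a tight loop, keeping one CPU thread busy."""
    polls = 0
    while not done.is_set():
        polls += 1
    return polls


def simulate(waiter, work_seconds: float = 0.05):
    """Return (polls, cpu_seconds) spent waiting for a stand-in 'GPU' to finish."""
    done = threading.Event()
    # Hypothetical stand-in for GPU work: a timer that signals completion.
    gpu = threading.Timer(work_seconds, done.set)
    cpu_before = time.process_time()
    gpu.start()
    polls = waiter(done)
    cpu_used = time.process_time() - cpu_before
    gpu.join()
    return polls, cpu_used


if __name__ == "__main__":
    for waiter in (wait_blocking, wait_spinning):
        polls, cpu = simulate(waiter)
        print(f"{waiter.__name__}: polls={polls}, cpu_seconds={cpu:.3f}")
```

The spinning variant reacts as soon as the flag flips but burns CPU time for the whole wait, which is why this style of option costs roughly one CPU thread per GPU task.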

Keith Myers Joined: 17 Nov 16 Posts: 906

|

> Maybe in the past with old tasks Einstein used to have more reliance on PCIe, but it does not appear to be the case anymore.

Yes, the comment about Einstein gpu tasks needing a lot of PCIE bandwidth was in reference to the old deprecated BRP4G application. That campaign ended many years ago. It did suffer a task slowdown if you dropped the card running it from X16 to X4, for example. The current MW and Einstein gpu tasks don't seem to work the gpu very hard. Not as hard as Seti gpu tasks, for example. I haven't seen any difference in current MW or Einstein gpu applications whether they run at X8 or X4. The task times are always within normal variance. |

Keith Myers Joined: 17 Nov 16 Posts: 906

|

> on such a slow system, i think your only shot at running GW would be to run 1 GPU only with 1 GW task and no other CPU tasks running. but maybe not even then.

My one dedicated Einstein system is a 2700X and 3 GTX 1070Ti's. Happily runs Gravity Wave cpu tasks on 8 threads plus a task each on each gpu which hop around between Gravity Wave GPU and Gamma Ray GPU tasks. No problem supporting the various work on that machine. It is responsive and crunches tasks with no apparent slowdowns. The gpus are running in X8/X8/X4 PCIE bus widths with the X4 slot dropping to Gen. 2 speeds. |

|

Joined: 24 Dec 19 Posts: 241

|

> My one dedicated Einstein system is a 2700X and 3 GTX 1070Ti's. Happily runs Gravity Wave cpu tasks on 8 threads plus a task each on each gpu which hop around between Gravity Wave GPU and Gamma Ray GPU tasks.

well you essentially have 8 modern/fast threads free to service 3 GPUs, which is more than enough. the OP is running a very very old 4c/4t chip, and with the amount of CPU resources needed on that app for nvidia cards, it's not worth running more than 1 task on 1 GPU. the GW app likes to overflow into multiple threads even for just GPU support. i've witnessed the GW tasks on the nvidia app requesting 130-150% of a CPU thread, which causes 2 threads to be used; it doesn't just stop with one thread. and that was on a much stronger CPU still (E5-2600 v2 Xeons). |
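The arithmetic behind that argument can be sketched in a few lines of Python. This is a simplification under stated assumptions: it treats any demand above 100% as occupying a second whole thread, as described in the post, and ignores the fact that the OS scheduler can time-slice. The function names are hypothetical.

```python
import math


def threads_occupied(cpu_percent: float) -> int:
    """CPU threads touched by one GPU task demanding cpu_percent of a thread."""
    return math.ceil(cpu_percent / 100)


def cpu_threads_left(total_threads: int, per_task_percent: float, gpu_tasks: int) -> int:
    """Threads left for CPU work after GPU support (assumes no time-slicing)."""
    return total_threads - threads_occupied(per_task_percent) * gpu_tasks


if __name__ == "__main__":
    # 4c/4t chip, GW task asking for 130-150% of a thread (figures from the post).
    for tasks in (1, 2):
        print(f"{tasks} GPU task(s): {cpu_threads_left(4, 150, tasks)} of 4 threads left")
```

On these assumptions, two such GPU tasks already account for all four threads of a 4c/4t chip, which matches the advice to run no more than one.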

|

Joined: 8 Nov 19 Posts: 718

|

I mean to say, some programs use pcie bus data as a source of GPU utilization. But pcie data transfer can not be measured with any close precision, because such measuring sensors don't exist in common PC hardware. At best, it can be estimated to within about 15%.

Boinc is similar to FAH: it will blip a fraction of a second of data, every so often (ten seconds or so), to the GPU via the PCIE bus. PCIE data can be read by Nvidia drivers, but the readings are woefully inaccurate. As mentioned, down to ~15% tolerance. That means, if a GPU shows 45% PCIE bandwidth utilization, it's anywhere from 35-55%, which is pretty inaccurate.

Furthermore, the Nvidia drivers are what pass the info on to any third party programs on Linux. They themselves poll the PCIE bandwidth only once every so many seconds, and take an average. In the case of Boinc and FAH, this could show 100% and 15% utilization in 2 consecutive readings, or an average reading that's lower than peak PCIE traffic.

Overall though, the Nvidia driver info is good enough to show whether the PCIE utilization is higher than 80% (which it is on a PCIE 3.0 x1 slot feeding a 2080 Ti); if it is, you can call it a bottleneck.

FAH Core 21 is close to a lot of Boinc projects (including einstein, the Collatz, Prime and GPU grid....). Core 22 uses up a lot more PCIE bandwidth (2-4x more). I would suspect that each cluster of data is chopped up more, and the CPU does more copy commands to the GPU, in order to keep all the cores active as much as possible.

But for Boinc, generally:

- PCIE 2.0 x1: < GT1030 graphics cards in Windows
- PCIE 2.0 x4, or 3.0 x1: < GTX 1060 graphics cards in Windows
- PCIE 3.0 x1: < RTX 2060 Super (on Linux)
- PCIE 3.0 x4: < RTX 2080 Ti on Linux, or < 2060 Super on Windows
- PCIE 3.0 x8: < RTX 2080 Ti on Windows

I wanted to buy another 2080 Ti, but I guess I'll just wait for the 3000 series GPUs to come out, and hope they won't be much more expensive than the 2080 Ti. |
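The point about averaged readings understating bursty traffic can be illustrated with a small Python sketch. Everything here is an assumption for illustration: the bus is modelled as fully busy for a short burst at the start of each period and idle otherwise, and the burst length, period, and averaging window are made-up numbers, not measurements of any driver or application.

```python
def windowed_utilization(burst_ms: int, period_ms: int, window_ms: int,
                         n_windows: int, peak_percent: float = 100.0) -> list:
    """Average bus utilization per window for a burst at the start of each period."""
    readings = []
    for w in range(n_windows):
        start = w * window_ms
        # Count the milliseconds in this window during which the bus is busy.
        busy = sum(1 for t in range(start, start + window_ms)
                   if t % period_ms < burst_ms)
        readings.append(peak_percent * busy / window_ms)
    return readings


if __name__ == "__main__":
    # Assumed: a 0.5 s burst every 10 s, averaged over 1 s windows.
    readings = windowed_utilization(burst_ms=500, period_ms=10_000,
                                    window_ms=1_000, n_windows=10)
    print("per-window readings:", readings)
    print("long-run average:", sum(readings) / len(readings))
```

With these made-up numbers the bus peaks at 100% during the burst, yet no one-second reading exceeds 50% and the long-run average is 5%, so consecutive readings can differ widely while all of them sit below the true peak.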

Joseph Stateson Joined: 27 Jun 08 Posts: 642

|

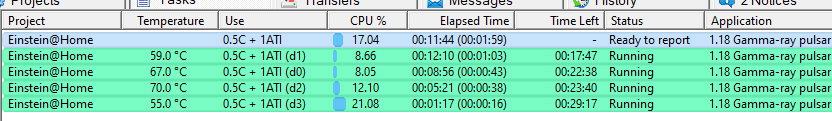

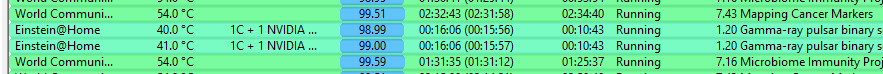

You mention gravity and also gamma. I quit doing gravity due to low credit and problems with drivers on older boards. With a "weak" CPU, the gamma ray pulsar app seems to run nicely on ATI boards, not so on NVidia. This was discussed over at Einstein, and it seems to come down to the way the polling is implemented as well as the hardware. The first image is from a Linux system with a really cheap 2 core Celeron G1840. The motherboard is an Hl61BTC I got down from the attic, and I put in a pair of RX560 and a pair of RX570. The 11 minute 44 sec completion is typical for the RX570; it is not obvious from the picture, but the RX560 typical is 25 - 29 minutes. Note the CPU usage is at most 22%, which works out nicely for the celeron. On the other hand, the nvidia board uses up a full cpu. Both systems run 18.04. |

|

Joined: 24 Dec 19 Posts: 241

|

> Boinc is similar to FAH, it will blip a fraction of a second of data, every so often (ten seconds or so) to the GPU via the PCIE bus.

"BOINC" does nothing of the sort. BOINC doesn't use the GPU, the science application does. and how it utilizes resources is entirely up to how that application was written. The Einstein GPU apps use system resources entirely differently than the CUDA special application at SETI, as an example, but both run on the BOINC platform. I've measured PCIe resources being used on both of these apps, and the SETI CUDA special app so far is the only one with a constant stream of data being sent over the PCIe bus for the entire duration of the WU run, using roughly 600MB/s of the bandwidth all. the. time. Einstein uses almost none, only like 1%.

can you provide a source from nvidia that claims PCIe bandwidth is only accurate to 15%? or is that number purely speculative? I have not found anything like that in their documentation. |
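To put the roughly 600MB/s figure in context, here is a short Python sketch of theoretical per-direction PCIe link bandwidth. It uses the published line rates and encodings (PCIe 2.0: 5 GT/s with 8b/10b; PCIe 3.0: 8 GT/s with 128b/130b) and ignores packet and protocol overhead, so real throughput is lower. The function names are hypothetical, and the 600 MB/s input is the measurement quoted in the post.

```python
# (transfer rate in GT/s, encoding efficiency) per PCIe generation
PCIE_GENERATIONS = {
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
}


def link_bandwidth_mb_s(gen: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in MB/s, before protocol overhead."""
    gt_s, efficiency = PCIE_GENERATIONS[gen]
    # GT/s * efficiency = usable Gbit/s per lane; /8 gives GB/s; *1000 gives MB/s.
    return gt_s * efficiency / 8 * 1000 * lanes


def share_percent(traffic_mb_s: float, gen: str, lanes: int) -> float:
    """Percentage of the theoretical link bandwidth taken by steady traffic."""
    return 100 * traffic_mb_s / link_bandwidth_mb_s(gen, lanes)


if __name__ == "__main__":
    for gen, lanes in (("2.0", 1), ("2.0", 4), ("3.0", 1), ("3.0", 4), ("3.0", 8)):
        bw = link_bandwidth_mb_s(gen, lanes)
        print(f"PCIe {gen} x{lanes}: {bw:7.0f} MB/s, "
              f"600 MB/s is {share_percent(600, gen, lanes):5.1f}% of the link")
```

On these theoretical numbers, a constant 600 MB/s stream exceeds a PCIe 2.0 x1 link (500 MB/s) and takes well over half of a 3.0 x1 link (about 985 MB/s), while an application using around 1% of the bus would fit on almost any riser.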

Copyright © 2025 University of California.

Permission is granted to copy, distribute and/or modify this document

under the terms of the GNU Free Documentation License,

Version 1.2 or any later version published by the Free Software Foundation.