Resource Share, what if?


| Author | Message |

|---|---|

|

Joined: 15 Jun 18 Posts: 12

|

Hi, Theoretically, if I suspend a project that has a resource share of, say, 200, does that share automatically get redistributed among the projects I'm still running? Or do I need to set the suspended project's resource share preference to 0 (zero) for the resources to actually be redistributed among the other projects? Regards, O&O

|

|

Joined: 5 Oct 06 Posts: 5080

|

Yes. No. Edit - that was a bit blunt ;-) A resource share of zero has a special, different, meaning: it makes that project a 'backup' project - work is only fetched, one task at a time, if no work is available from any other project and the computer is at risk of becoming idle within three minutes. Only use it with that meaning in mind - so it makes no sense when the project is suspended anyway. |

|

Joined: 15 Jun 18 Posts: 12

|

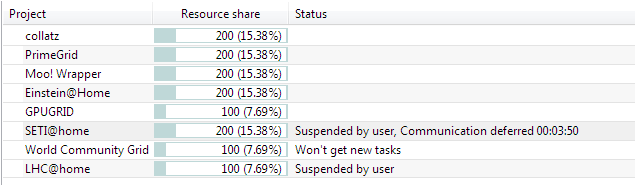

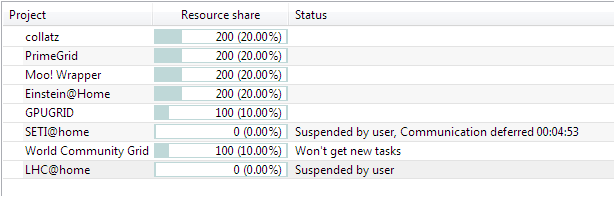

Well, I tried it and I'm unsure, because after suspending the project, the resource percentages shown in BOINC for the remaining running projects stay as they were. Setting the suspended project's preference to 0, on the other hand, did make the other projects' percentages increase in BOINC. So...

|

|

Joined: 5 Oct 06 Posts: 5080

|

Where did you look? Some of the Event Log options - like <Work Fetch debug> - give second-by-second data about the share, for each resource, taking into account all the current backoffs, suspensions, preferences, etc. |

|

Joined: 15 Jun 18 Posts: 12

|

BOINC Client > Advanced view > Projects > Resource Share (column). Notice the resource share percentages: Before ... After ...

|

|

Joined: 5 Oct 06 Posts: 5080

|

It's still there, waiting for you to use it, but operationally it won't play any part.

13/07/2018 08:56:46 | | [work_fetch] --- state for CPU ---

That's a fetch share of 1 (==100%) for just one project in each resource section. The same would apply to runtime allocation. |
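As a rough illustration of the distinction made in this post (the share is still listed, but operationally plays no part), the sketch below contrasts two ways of turning resource shares into percentages. It is a simplified model based only on what is described in this thread, not BOINC's actual scheduling code; the `Project` class, its fields, both function names, and the project names are hypothetical. The special "backup project" meaning of a zero share is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Project:
    name: str              # hypothetical example name
    resource_share: float  # the value set in the project preferences
    suspended: bool = False


def listed_percentages(projects):
    """Percentages computed over every attached project, suspended or not."""
    total = sum(p.resource_share for p in projects)
    if total == 0:
        return {p.name: 0.0 for p in projects}
    return {p.name: 100.0 * p.resource_share / total for p in projects}


def effective_percentages(projects):
    """Percentages computed only over projects that are not suspended."""
    active = [p for p in projects if not p.suspended]
    total = sum(p.resource_share for p in active)
    result = {p.name: 0.0 for p in projects}
    if total == 0:
        return result
    for p in active:
        result[p.name] = 100.0 * p.resource_share / total
    return result


# Assumed example: one suspended project with share 200, two running with 100 each.
projects = [
    Project("A", 200, suspended=True),
    Project("B", 100),
    Project("C", 100),
]
print(listed_percentages(projects))
print(effective_percentages(projects))
```

Under these assumptions the listed figure for the suspended project stays at 50%, while the two running projects effectively split the machine between them, which would match the unchanged percentages reported earlier in the thread.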

|

Joined: 15 Jun 18 Posts: 12

|

@Richard, did you suspend all your projects, edit the resource share preference to zero for each project, then update all the projects, and find that at least one SETI task was still running? Why SETI?

|

|

Joined: 5 Oct 06 Posts: 5080

|

I tend to use 'no new tasks' rather than 'suspend' - many of them are projects which I have attached to briefly to run one or two test tasks to explore a problem, but not felt attracted into a long-term relationship.

If I did find an unexpected task running, I would read the Event Log in detail to try to understand the circumstances, and report a bug if I found one. It's often easiest to do that by opening the event log archive file 'stdoutdae.txt', and using the 'find' facility in your text editor. The full life-cycle for a task looks like

11-Jul-2018 21:16:03 [SETI@home] [sched_op] Starting scheduler request

To track down what happened, it's important to find that original 'Sending scheduler request: To fetch work.' that triggered the download. It's a common misconception that projects "send" work: they can't. They only respond to requests for work (if the project initiated the call, it wouldn't get through your firewall). Look back over what you were doing around the time that task was downloaded. |
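The text-editor search described in this post can also be scripted. The following is a minimal sketch, assuming the log is plain text with one event per line and that a work request contains exactly the phrase quoted above; the function name is hypothetical, and the sample lines are made up in the style of the excerpt, not taken from a real log.

```python
def find_work_requests(lines, project=None):
    """Return (line_number, text) for each work-fetch scheduler request."""
    marker = "Sending scheduler request: To fetch work."
    hits = []
    for number, line in enumerate(lines, start=1):
        if marker not in line:
            continue
        # Optionally keep only lines that mention the project, e.g. "[SETI@home]".
        if project is not None and f"[{project}]" not in line:
            continue
        hits.append((number, line.rstrip("\n")))
    return hits


# Made-up sample lines, for illustration only.
sample = [
    "11-Jul-2018 21:16:03 [SETI@home] [sched_op] Starting scheduler request\n",
    "11-Jul-2018 21:16:03 [SETI@home] Sending scheduler request: To fetch work.\n",
    "11-Jul-2018 21:20:00 [Other project] Sending scheduler request: To fetch work.\n",
]
for number, text in find_work_requests(sample, project="SETI@home"):
    print(number, text)
```

To use it on a real log, pass an open file handle for 'stdoutdae.txt' instead of the sample list; the timestamps of the matches then show where to start looking back at what you were doing.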

Copyright © 2024 University of California.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation.